Matrix — Catalyst for the AI Big Bang (Part 2)

The Mechanism of MANTA

MANTA — MATRIX AI Network Training Assistant is a distributed auto-machine learning platform built on Matrix’s highly efficient blockchain. It is an ecosystem of AutoML applications based on distributed acceleration technology. This technology was originally used in the image categorisation and 3D deep estimation tasks of computer vision. It looks for a high-accuracy and low-latency deep model using Auto- ML network searching algorithms and accelerates the search through distributed computing. MANTA will have two major functions: auto-machine learning and distributed machine learning.

Auto-Machine Learning — Network Structure Search

Matrix’s auto-machine learning adopts a once-for-all (OFA) network search algorithm. Essentially, it builds a supernet with massive space for network structure search capable of generating a large number of sub-networks before using a progressive prune and search strategy to adjust supernet parameters. This way, it can sample from the supernet structures of various scales but whose accuracy is reliable, and this can be done without the need to micro-adjust the sampled sub-networks for reducing deployment costs.

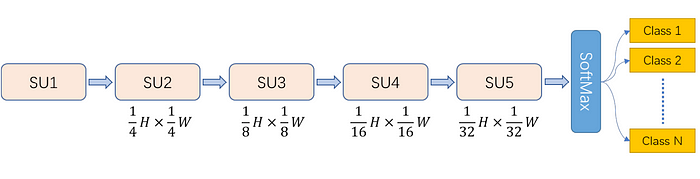

Take image categorisation for example. Below is an illustration of the network structure MANTA adopts. This structure consists of five modules. The stride of the first convolutional layer of each module is set to 2, and all the other layers are set to 1. This means each SU is carrying out dimensional reduction while sampling multi-channel features at the same time. Finally, there is a SoftMax regression layer, which calculates the probability of each object type and categorizes the images.

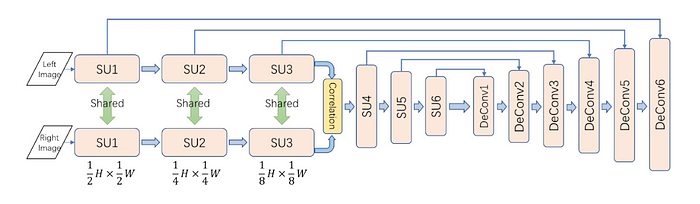

Meanwhile, the picture below is an illustration of the network structure MANTA adopts for deep estimation. There are six searchable modules in this structure. The first three SUs are mainly used to carry out dimensional reduction and subtract the multi-scale feature image from the left and the right views. There is a correlation layer in the middle for calculating the parallax of the left and the right feature images. The last three SUs are for further subtracting the features of the parallax. Subsequently, the network magnifies the dimension-reduced feature image through six transposed convolutional layers and correlates to the feature image produced by the six former SUs through a U link to estimate deep images of different scales.

Distributed Machine Learning — Distributed Data/Model Parallel Algorithm

MANTA will use GPU distributed parallel computing to further accelerate network search and training. Distributed machine learning on MANTA is essentially a set of distributed parallel algorithms. These algorithms support both data parallel and model parallel for acceleration. The purpose of distributed data parallel algorithms is to distribute each batch of data from every iteration to different GPUs for forward and backward computing. A GPU uses the same sub-model for each iteration. A model parallel is different from a data parallel in that for every iteration, MANTA allows different nodes to sample different sub-networks. After they each complete their gradient aggregation, the nodes will then share their gradient information with each other.

Distributed Data Parallel Algorithm Workflow

1. Sample four sub-networks from the supernet

2. Each GPU on each node of every sub-network carries out the following

Steps:

(1) Access training data at a number according to the set batch size;

(2) Broadcast the current sub-network forward and backward;

(3) Accumulate gradient information that is broadcast backward.

3. Aggregate and average the gradient information accumulated by all GPUs and broadcast them. (Carry out All Reduce.)

4. Every GPU uses the global gradient information it receives to update its supernet;

5. Return to step 1.

Distributed Model Parallel Algorithm Workflow (Taking Four Nodes for Example)

1. GPUs located on the same node sample the same sub-network model. We have four different sub-network models in total.

2. All GPUs located on the same node carry out the following steps:

(1) Access training data at a number according to the set batch size;

(2) Broadcast the sub-network sample from the node forward and backward;

(3) Calculate the gradient information that is broadcast backward;

(4) GPUs on the same node aggregate and average the gradient information and store the result in the CPU storage of the node.

3. The four nodes each aggregate and averages the gradient information it gets in step 2 and broadcast the result to all GPUs;

4. Every GPU uses the global gradient information it receives to update its super net;

5. Return to step 1.

New Public Chain of the AI Era

MANTA will take Matrix one step further towards a mature decentralised AI ecosystem. With the help of MANTA, training AI algorithms and building deep neural networks will be much easier. This will attract more algorithm scientists to join Matrix and diversify the AI algorithms and services available on the platform. Competition among a large number of AI services will motivate them to improve so that users will have more quality services to choose from. All of this will lay a solid foundation for the soon-to-arrive MANAS platform.

Secondly, MANTA will lower the entry barrier to AI algorithms. This will no doubt attract more developers and corporate clients to the Matrix ecosystem, which will further solidify its value and boost the liquidity of MAN.

Finally, as more people use MANTA, there will be greater demand for computing power in the Matrix ecosystem. For miners, this will bring them lucrative rewards and in turn attract more people to become computing power providers for Matrix, further expanding the scale of its decentralised computing power distribution platform. All this will help Matrix optimize marginal costs and provide quality computing power at a better price for future clients, and this forms a virtuous cycle.

MANTA is a vital piece in Matrix’s blueprint. It will fuel Matrix’s long-term growth and set the standard for other public chain projects.

Matrix AI Network leverages the latest AI technology to deliver on the promise of blockchain.

FOLLOW MATRIX:

Website | Github | Twitter | Reddit | Facebook | Youtube | Discord

Telegram (Official) | Telegram (Masternodes)

Owen Tao (CEO) | Steve Deng (Chief AI Scientist)